FollowMee along OpenPaths

"Give. Me. The data."

That's what many Quantified Self adherents said back in 2011 when Apple admitted that its phones had accidentally been saving a database of geopositional data covering recent months. Apple promised to delete most of the database in the next update.

That bug didn't sound bad to me, it sounded awesome! I grabbed that database as quickly as I could, and looked for a service to continue the geoposition scraping so I could implement a location predictor.

The service OpenPaths appeared quickly on the scene. It's a project from NYTLabs. It's free, and a major benefit is that:

Users can securely store and manage their personal location data, and grant researchers access to portions of that data as they choose.

That's quite the magnanimous service! Neither the users who run the app nor the researchers who gather the data are paying anything for the service. We're users, but we're not paying customers. The NYTLabs must be running it out of the goodness of their own hearts, and the users and researchers are indebted to them.

The downside became apparent pretty quickly. As there are no paying customers, the service doesn't get a lot of monitoring or attention. API calls can take a minute or more. Sometimes they fail, and sometimes the service simply goes down for days. The only appeals we can make as users are emotional ones. We have no financial leverage.

@openpathscc @nytlabs I only bother you to revive https://t.co/g1HY2sWqNQ and its service because I think you and it are awesome + valuable.

-- David Blume (@dblume) May 22, 2015

Given that I'm not the customer of OpenPaths, but my data is the product, I went searching for a redundant service where I am the customer. I came across FollowMee, another app with the same feature set. It's a paid service, and the developer is responsive on their bulletin board. I've installed it as a backup and potential replacement for OpenPaths.

There's a saying that generally holds true of online services:

If you're not the customer, you're the product.

That's why I'm running both the free service OpenPaths, to share my geopositional data with researchers, and the paid service FollowMee, for some assurance the service will work while I pay for it. I'll continue to run FollowMee along OpenPaths while they both do their respective jobs.

Photo by Axiraa / CC BY-NC-ND 2.0

Ways to Break a Dollar into Change

I'm going to retire a technical interview question that I've had ready to ask, but that turns out to be probably too difficult. It's more a wizard-level interview question, or a casual technical discussion with peers who don't feel like they're under the gun.

How many ways are there to break a U.S. dollar into coins?

It seemed appropriate to ask this of some candidates, because once you know what you're trying to do, the algorithm in Python naturally reduces down to five or six lines of code.

    def ways_to_break(amount, coins):
        this_coin = coins.pop(0)
        # If that was the last coin, only one way to break it.
        if not coins:
            return 1
        # Sum the ways to break with the smaller coins.
        return sum([ways_to_break(amount - v, coins[:])
                    for v in range(0, amount+1, this_coin)])

    print(ways_to_break(100, [100, 50, 25, 10, 5, 1]))

You could click on the gist with more comments and better variable names if you want to try to better understand that. It's not meant to be production code, it's a whiteboard snippet made runnable.

Here's what I hoped to look for, from the candidate: After maybe clarifying what I wanted, ("what about the silver dollar or half-dollar coins? Did you want permutations or combinations?"), the problem should seem well-defined, but hard. Then, I'd hope the candidate would break it down to the most trivial cases: "How many ways are there to break 5¢ if you have pennies and nickels? How about 10¢ with pennies, nickels and dimes?" Can these trivial cases be used to compose the bigger problem?

At that point, different areas of expertise would appear. Googlers might start jumping towards MapReduce, and already figure that Reduce is simply the summation function because the question is "how many", and have the thing worked out at scale.

Imperative programmers, having thought about the simplest cases, probably clue in that there's an iterative approach and a recursive approach.
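For comparison, here is a minimal sketch of what the iterative approach might look like. This isn't the gist's code; `count_ways_iterative` is a name invented for this illustration. It builds the answer up from the trivial cases: start with one way to make 0¢, then fold in one denomination at a time.

```python
def count_ways_iterative(amount, coins):
    """Count the combinations of coins that add up to amount (in cents)."""
    # ways[i] is the number of ways to make i cents with the coins seen so far.
    # There is exactly one way to make 0 cents: use no coins at all.
    ways = [1] + [0] * amount
    for coin in coins:
        # Every way to make (total - coin) extends to a way to make total
        # by adding one more of this coin.
        for total in range(coin, amount + 1):
            ways[total] += ways[total - coin]
    return ways[amount]


print(count_ways_iterative(5, [5, 1]))                       # 2
print(count_ways_iterative(10, [10, 5, 1]))                  # 4
print(count_ways_iterative(100, [100, 50, 25, 10, 5, 1]))    # 293
```

Because each denomination is folded in exactly once, the order of the coins doesn't matter here, and a denomination that can't contribute to the total simply adds nothing.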

Pythonistas who prefer functional programming probably have reduce(lambda x, y: x + y, [...]) ready to go.
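To make that concrete, here is one hedged guess at how the functional flavor could look. It is the same recursion as the snippet above, with `sum` spelled as `reduce(operator.add, ...)`. The name `ways_to_break_reduce` is made up for this sketch, and two things are additions rather than part of the original: the divisibility check on the last coin, and slicing instead of popping so the caller's list isn't modified.

```python
from functools import reduce
import operator


def ways_to_break_reduce(amount, coins):
    """Count ways to break amount using coins (a non-empty list)."""
    this_coin, rest = coins[0], coins[1:]
    # Last coin: it either divides what's left evenly, or it doesn't.
    if not rest:
        return 1 if amount % this_coin == 0 else 0
    # Fold the counts for each possible use of this_coin into one total.
    return reduce(operator.add,
                  (ways_to_break_reduce(amount - v, rest)
                   for v in range(0, amount + 1, this_coin)),
                  0)


print(ways_to_break_reduce(100, [100, 50, 25, 10, 5, 1]))  # 293
```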

That got me thinking. I didn't see the need for reduce and operator.add and enum. My solution above isn't above criticism, but I think it's easier to read than some of the alternatives. In truth, it really depends on who that reader is, and how their experience has conditioned them.

While writing the algorithm, I searched the question online, and was pleasantly surprised to see Raymond Hettinger mentioned for solving the puzzle. He's a distinguished Python core developer. Too bad he probably didn't have Python then, in 2001, to help him solve the problem in a scant few lines.

Taking it up a Notch

Adam Nevraumont proposed the following challenge:

Drop the penny, and try to break $1.01. The Canadian penny has been retired, after all.

He provided the answer: Check that the remaining coin can break the amount. Don't assume it can. This also relieves the restriction that the container of denominations be ordered!

    def ways_to_break(amount, coins):
        this_coin = coins.pop()
        # If that was the only coin, can it break amount?
        if not coins:
            if amount % this_coin:
                return 0
            else:
                return 1
        # Sum the ways to break with the other coins.
        return sum([ways_to_break(amount - v, coins[:])
                    for v in range(0, amount+1, this_coin)])

    print(ways_to_break(100, [1, 100, 10, 50, 25, 5]))

(It's actually more efficient to pop the largest denominations first. But I wanted to show that you can use an unordered container now.)
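If efficiency did matter, one possible variation (a sketch only, with the invented name `ways_to_break_memo`) sorts the denominations largest-first on entry and caches repeated subproblems with `functools.lru_cache`. It assumes a non-empty collection of positive integer denominations, and it leaves the caller's list untouched.

```python
from functools import lru_cache


def ways_to_break_memo(amount, coins):
    """Count ways to break amount; coins may be given in any order."""
    # Work on a sorted copy so the largest denominations are tried first.
    ordered = tuple(sorted(coins, reverse=True))

    @lru_cache(maxsize=None)
    def ways(remaining, index):
        coin = ordered[index]
        # Only coin left: check that it can actually break what remains.
        if index == len(ordered) - 1:
            return 1 if remaining % coin == 0 else 0
        # Sum the ways to break the rest with the other coins.
        return sum(ways(remaining - used, index + 1)
                   for used in range(0, remaining + 1, coin))

    return ways(amount, 0)


print(ways_to_break_memo(100, [1, 100, 10, 50, 25, 5]))  # 293
print(ways_to_break_memo(101, [100, 50, 25, 10, 5]))     # 0, no penny means no way
```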

Photo by Ian Britton / CC BY-NC 2.0

The Smallest GitHub Fork

Not gonna bury the lede: I forked a project in GitHub to change a color in a colorscheme. That's basically a tiny one-word change.

I changed jobs a few months ago, and I have the longest commute yet. I far prefer working in the office, but I don't want to have to be in the office to resolve any little emergency. So I set up my environment to be able to work from home.

First, there's access to work's VPN. Absolutely no problem there. That's the first thing everybody needs to work from home.

Then, just in case I need to see my work computer's desktop, there's VNC. I got close, but no cigar.

I set up a server, and can connect via multiple clients, but they all have the same blitting problem when it comes to launching applications. No big deal, there's another way to skin that cat.

If I can't see my work computer's desktop, I can still run its GUI applications via X11 Forwarding. That's pretty good, but those apps always look a little weird on the client side.

Then there's the last resort: good old ssh with a text-based editor. And in my case, that's vim. Low-bandwidth, and gets most of the jobs done.

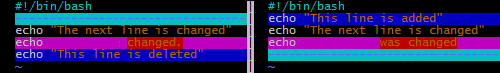

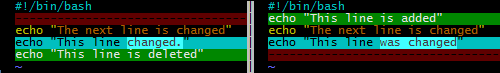

There was a problem even with that last resort. In the color scheme I use, sometimes words would become invisible when running vimdiff. It depends on the context coloring of the source files. In the following screenshot, there are words in the fuchsia line that we can't see.

It's an easy-enough problem to fix. The normal thing to do is to find the configuration file, desert.vim, and change the offending color to not be fuchsia. Then you're done, your problem is solved.

But I'm a developer, and to my mind, this stinks of a bug. And if it affects me, it could affect others, too. So the right thing to do is to fix the bug in a public fork, and possibly share the fix with a pull request.

I found the original repository, and made my fork. I fixed the bug. Text on the changed lines would now be visible. While I was in there, a couple of other changes wanted to be made. I made deletions muted red, and additions green. But without a screenshot, visitors wouldn't know how the change looks. So I made the screenshots.

(Both screenshots here look a bit garish. The screenshots don't reflect what a real vimdiff would look like. In practice the improved scheme works much better for me.)

Since I now had the screenshots, I updated the README file to show the effect of the change in place.

I committed my changes, and thought to myself, "isn't it interesting the lengths developers will go to, to fix little problems and document those fixes? And how a tiny fix can grow into something larger?" I oughtta blog about it.

Dad's Project in the Garage

There's a trope about the dad who works constantly on his favorite old piece-of-junk project in the garage. He may never get it running, but getting it running is not the only point of the project. A bigger point is that it's the thing he goes to to clear his head and recharge. It's something that he can focus intently on, and it's something that's entirely in his domain.

I have a number of slow-burning projects at home, too. But they're not in the garage, they're in the cloud. Usually, they're web services. At work, as part of a team, I write code that goes on embedded devices. But at home, the entire product is mine. It's my chance to be a "full stack" engineer, the CEO, and principal customer all at once. It's nice to take on these different roles.

Updating a File

Let's take a quick look at updating a file. It's one of the most useful and common operations in programming, so it's really very well understood. A naive Python snippet to update a file with new data would look like the following:

    with open(filename, 'wb') as f:
        f.write(data)

What's beautiful about it is that it's cross-platform, and Python's "with" statement takes care of closing the file that was opened, even if an error occurs when writing the data.

But when you deploy code into the real world, you have to dive deeper, and think about what really can go wrong. For example, the automatic closing of the file I mentioned above? Python flushes data to the file, but doesn't necessarily sync the file to the physical disk. And in my case, it really does have to be cross platform, and I can only have one process access the file at a time, and I don't want IO errors corrupting the data. That means finding a cross-platform file lock, doing all the work on the side, and atomically moving the side file to the production file. The following snippet (elaborated a little more at this gist) fixes those problems.

    import ntpath
    import os
    import platform
    import tempfile

    import filelock  # cross-platform file lock module (not in the standard library)

    with filelock.FileLock(filename):
        with tempfile.NamedTemporaryFile(mode='wb',
                                         dir=ntpath.dirname(filename),
                                         delete=False) as f:
            f.write(data)
            f.flush()
            if platform.system() == "Windows":
                os.fsync(f.fileno())  # slower, but portable
                if os.path.exists(filename):
                    os.unlink(filename)  # or else WindowsError
            else:
                os.fdatasync(f.fileno())  # faster, Unix only
            tempname = f.name
        os.rename(tempname, filename)  # Atomic on Unix

On the one hand, it's no longer a two-liner. On the other hand, I've got a function that works in all the environments I need it to, and I know exactly how it's going to behave in any exceptional situation.
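For readers on Python 3.3 or later, a variation on the same idea is sketched below using only the standard library. It is an illustration under assumptions, not a replacement for the snippet above: `atomic_write` is a made-up name, there is no file lock (so it assumes a single writer), and it leans on `os.replace`, which overwrites an existing target on Windows as well as on Unix.

```python
import os
import tempfile


def atomic_write(filename, data):
    """Write bytes to filename by way of a temp file in the same directory."""
    directory = os.path.dirname(os.path.abspath(filename))
    # The temp file must live on the same filesystem for the rename to work.
    fd, tempname = tempfile.mkstemp(dir=directory)
    try:
        with os.fdopen(fd, 'wb') as f:
            f.write(data)
            f.flush()
            os.fsync(f.fileno())  # push the bytes to disk before renaming
        os.replace(tempname, filename)  # swap the new file into place
    except BaseException:
        # Don't leave a stray temp file behind if anything went wrong.
        if os.path.exists(tempname):
            os.unlink(tempname)
        raise
```

One caveat with this sketch: `mkstemp` creates the file readable and writable only by its owner, so the final file keeps those restrictive permissions unless they're changed before the rename.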

Removing a Photo from a Web Album

My latest project is a web-based photo album. I could pay Flickr, Google or Apple for their web albums, of course, but they've made UX changes I don't like, and their costs are too high. So, I'm writing my own. It'll never be as polished as the production photo albums, but it'll be my jalopy, and I'll be able to tweak it in any way I choose.

One of the things my album owners need to do is delete photos they no longer want in the album. So, how should I implement that?

    os.unlink(photo_filename)

That's the command to delete a file. You know it's not going to be that easy. Since we're talking about a web album, we'd want to consider a few things. We want an ideal user experience. It has to be fast, they have to be able to change their minds later, and concurrency can't be a problem. Here are some of the steps involved in deleting a photo from the album.

- Use JavaScript to make them confirm their choice in their browser.

- Schedule the deletion of the entry in the local metadata file. It'll be done in a side thread or at a later time. Just make sure the user's web page's data refreshes quickly.

- Add a new entry to a list of timestamped-files-to-be-deleted-in-a-month.

- Run a cronjob that works on a regular cycle that actually does timestamp checking and the physical file deletion. (It's actually an S3 key deletion, which gets propagated to multiple cloud static-file servers behind the scenes. Even more remote complexity is encapsulated behind a single function call.)

What almost looked like it'd be a one-line call turns into JavaScript, AJAX, worker-thread creation, data file manipulation, and a cronjob with its own health and status reporting scheme.
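The "delete it in a month" half of those steps could be sketched roughly as follows. Everything here is hypothetical: the function names, the 30-day approximation of a month, and the in-memory list standing in for the timestamped file. The `delete_key` callback is a stand-in for whatever performs the real S3 key deletion.

```python
import time

GRACE_PERIOD = 30 * 24 * 60 * 60  # "a month", approximated as 30 days of seconds


def schedule_deletion(pending, key, now=None):
    """Record that key should be deleted once the grace period has passed."""
    pending.append((time.time() if now is None else now, key))


def purge_expired(pending, delete_key, now=None):
    """Delete every expired key; return the entries that are still waiting.

    This is the part a cronjob would call on a regular cycle.
    """
    now = time.time() if now is None else now
    still_waiting = []
    for stamp, key in pending:
        if now - stamp >= GRACE_PERIOD:
            delete_key(key)  # stand-in for the real storage deletion
        else:
            still_waiting.append((stamp, key))
    return still_waiting
```

Until the purge runs, undoing a deletion is only a matter of dropping the entry from the pending list, which is what lets users change their minds later.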

This is fun! Amirite? This is what working on your own personal project is all about. So let's dive a little more deeply into step 2 above.

When the user confirms deletion of a photo, the first thing that should happen is that they get feedback. The photo needs to disappear from their view immediately. This happens before the server actually deletes the photo from persistent storage. JavaScript can manipulate the DOM right away in their browser. Over on the server, let's say you want to remove a photo's filename and its metadata from a list of photos.

Removing an Item from a Container

The task of removing the photo data from a list can be simplified to the task of removing a record from a container (l) when the first field of that record matches a certain criterion (s).

There's the loop:

    for item in l:
        if item[0] == s:
            l.remove(item)
            break

That's a straightforward, imperative, language-neutral, non-Pythonic implementation. Iterate across the container until you find the item, and then delete it right away. Unfortunately, l.remove(item) is another O(n) function being called inside an iteration of the list. That second search can be avoided with the enumerate call:

    for idx, item in enumerate(l):
        if item[0] == s:
            del l[idx]
            break

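This is better. The enumerate() call returns an index, so del l[idx] can drop the item without the second linear search that l.remove(item) performs. Deleting from the middle of a Python list still shifts every later element, so the deletion itself is O(n) in the worst case rather than O(1), but the list is now scanned only once.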

Although that'd work, let's try to find a more modern and efficient solution. Use the enumerate call in a generator that returns the index of the item to be deleted:

    idx = next((i for i, v in enumerate(l) if v[0] == s), None)
    if idx is not None:
        del l[idx]

The generator call is very efficient, and maybe we're done. No we're not! You see, it's a trick question. The actual answer is:

    DELETE FROM l WHERE f = s LIMIT 1;

When it's your own project, you can change the domain! The data never had to be in a container that Python could process. Why not store it in a SQL database? Or, hey, just for grins, why not keep it in Python, but why was the container accessed like an unordered list? Was it ordered? Then do a binary search.

    idx = bisect.bisect_left(l, [s, ])
    if idx != len(l) and l[idx][0] == s:
        del l[idx]

Nice, O(log n). Or, wait. Maybe it doesn't have to be ordered. Each photo has a unique filename. That's a key. Each photo's data could be a record in a Python set. Python has a "set" container that resembles mathematical sets with all the performance features you'd hope for. So let's make "l" be a set, and "r" be the row that has s in it, then...

    l.remove(r)

Yay, that'll usually occur in constant time! So have we found the best solution?

Of course not. We can make the theoretical problem as simple as we like. But in practice, the web album sometimes wants a database with different primary keys, sometimes it wants an ordered list of items, and sometimes it only needs a set of unique items.
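For the set-of-unique-items case, one practical wrinkle is that set members must be hashable, and removing a row from a set requires the whole row, not just its key. A small sketch, assuming made-up records of the form (filename, caption), shows the difference between a set and a dict keyed by filename:

```python
# Hypothetical records: (filename, caption). Filenames are unique.
photos = [("a.jpg", "Beach"), ("b.jpg", "Garage"), ("c.jpg", "Garden")]

# A set needs hashable members, so the records are tuples rather than lists.
photo_set = set(photos)
photo_set.remove(("b.jpg", "Garage"))  # needs the entire row to find it

# A dict keyed by filename removes by key alone, also in O(1) on average.
photo_index = {name: caption for name, caption in photos}
photo_index.pop("b.jpg", None)  # the None default avoids a KeyError if it's gone

print(sorted(photo_set))    # [('a.jpg', 'Beach'), ('c.jpg', 'Garden')]
print(sorted(photo_index))  # ['a.jpg', 'c.jpg']
```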

For that matter, sometimes my user will be on a desktop computer, sometimes they'll be on a tablet, and often they'll be on their phone. That's a lot of CSS to experiment with.

That's what fiddling with your own personal project is all about! Dive deeply into whichever problem piques your interest at that moment. Make something work better. Even if the rest of the world doesn't know why you bother. Sometimes it's just what you need to be doing.

Photo by tashland / CC BY-NC-ND 2.0

Why I Returned My Chromebook

I've long been a "true believer" in remote computing. I didn't distinguish between an internet cloud and a single remote server. What mattered to me was that all I needed near me was some sort of device to send and receive data. The smaller and thinner the local device, the better.

Ever since I heard John Gage, the Chief Researcher and Vice President of the Science Office for Sun, say that "the network is the computer", I thought that the future was clear. He and I both knew where we were headed.

As soon as the first Chromebook came out, I was interested. But I didn't buy. When I spend my own discretionary money, I'm merely cutting edge, as opposed to bleeding edge. So I waited...

When ChromeOS version 19 and the Samsung Series 5 550 came out, it was time for me to buy. I figured they'd gotten most of the kinks out, and the device would be eminently usable.

The Chromebook arrived and I loved it. The laptop itself didn't disappoint at all. The battery life, the screen, the keyboard and touchpad - all of it was great.

I returned the Chromebook because of small missing features in ChromeOS. I knew that ChromeOS didn't support Java. I should have known that ChromeOS wouldn't support QuickTime. I could have lived with those two missing features. The third missing feature: ChromeOS doesn't support SSL VPN solutions. That means I can't even use my Chromebook for connecting to my work's network.

So, I couldn't run Java applications, I couldn't watch PATV, and I couldn't work from home. It was with a heavy heart that I realized the state of technology isn't quite there yet, and I returned the Chromebook.

Dear Google: I'm still watching and hoping. Get to the point where I can access the networks I need on your portable network device, and you'll get my money. You're so close.
