My TechCrunch Feed Filter

It's the sixth anniversary1 of my TechCrunch Feed Filter. In Internet time, that's ages. A lot has changed. Here's a look back.

The Problem

TechCrunch was a great blog about innovation and entrepreneurship. As it grew, it published more articles than I cared to read. Like many savvy blog readers, I used a feed reader to present the latest articles to me, but TechCrunch was simply too profuse.

The Solution

I created a service that'd visit TechCrunch's feed and make note of who wrote which articles, what the articles were about, how many comments each article had, and how many Diggs2, Facebook likes and Facebook shares each article had.

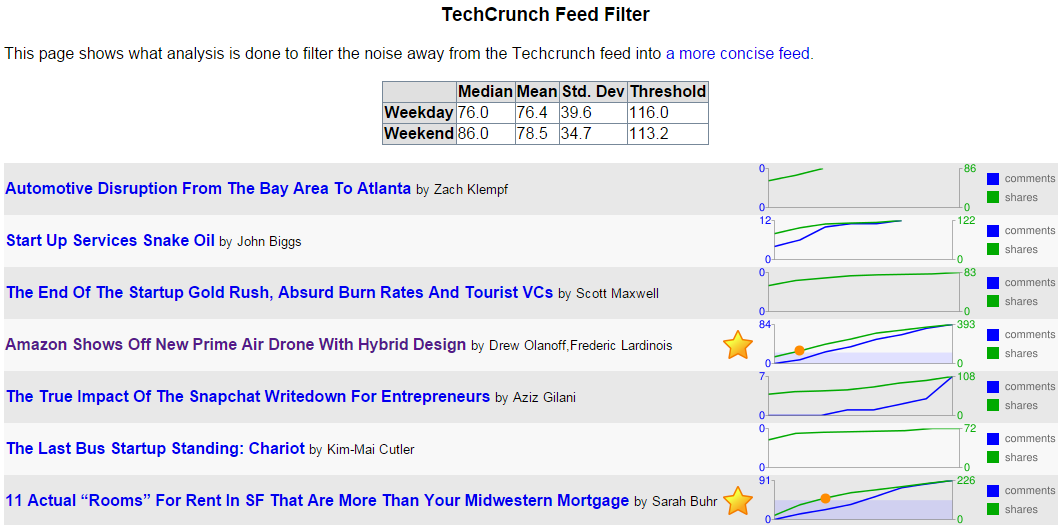

With that data, the service would determine the median, mean, and standard deviation, and create a minimum threshold for whether the article merited being seen by me. The raw data is stored in a live YAML file. There were some special rules, like, "If the article is by Michael Arrington, or has 'google' in the tags field, automatically show it to me." Otherwise, other readers had to essentially vote the article high enough for it to pass the filter.

In the picture above, you can see that two posts out of seven met the criteria to be in the filtered feed. They're the ones with the gold stars. The threshold was calculated to be 116 shares, and you can see in the graph when each article had more than the threshold. (There's a red circle at the point the green shares line rose above the blue area that designates the criteria level.)

Once the service knew which posts were worthy of my attention, it listed them in its own filtered feed.
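Here's a minimal sketch of that filtering step in Python. It is not the service's actual code. The post fields, the sample numbers, and especially the threshold formula (mean plus half a standard deviation) are assumptions for illustration; only the statistics and the two special rules come from the description above.

```python
from statistics import mean, median, pstdev

# Hypothetical sample data; the field names are assumptions for illustration.
posts = [
    {"title": "A", "author": "Staff Writer", "tags": ["startups"], "shares": 10},
    {"title": "B", "author": "Michael Arrington", "tags": ["opinion"], "shares": 20},
    {"title": "C", "author": "Staff Writer", "tags": ["Google", "search"], "shares": 30},
    {"title": "D", "author": "Staff Writer", "tags": ["gadgets"], "shares": 40},
    {"title": "E", "author": "Staff Writer", "tags": ["funding"], "shares": 200},
]


def share_stats(posts):
    """Summarize the share counts of the posts currently in the feed."""
    counts = [p["shares"] for p in posts]
    return {"median": median(counts), "mean": mean(counts), "stdev": pstdev(counts)}


def threshold(stats, k=0.5):
    """Assumed formula: the real service's threshold rule isn't documented here."""
    return stats["mean"] + k * stats["stdev"]


def merits_attention(post, cutoff):
    """Apply the special rules first, then fall back to the share threshold."""
    if post["author"] == "Michael Arrington":
        return True
    if any("google" in tag.lower() for tag in post["tags"]):
        return True
    return post["shares"] > cutoff


stats = share_stats(posts)
cutoff = threshold(stats)
filtered = [p["title"] for p in posts if merits_attention(p, cutoff)]
print(filtered)
```

Posts B and C get through on the special rules alone, while E has to earn its place with shares.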

Changes over Time

In the beginning, TechCrunch used WordPress's commenting system. As such, its feed included the slash:comments tag. At the time, that was the best metric of how popular a TechCrunch post was, better than Facebook shares. But TechCrunch started experimenting with different commenting systems like Disqus and Facebook comments to combat comment spam. Neither of those systems used a standard mechanism to get comment counts, so every time it changed commenting systems, I had to change my service.
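For the curious, reading slash:comments out of a feed takes only a few lines. This is a sketch using Python's standard library, not the code my service runs. The sample feed is made up, and the function name is hypothetical.

```python
import xml.etree.ElementTree as ET

# Namespace of the RSS "slash" module, which defines <slash:comments>.
SLASH_NS = {"slash": "http://purl.org/rss/1.0/modules/slash/"}

# A made-up feed for illustration only.
SAMPLE_FEED = """<?xml version="1.0"?>
<rss version="2.0" xmlns:slash="http://purl.org/rss/1.0/modules/slash/">
  <channel>
    <title>Example feed</title>
    <item>
      <title>First post</title>
      <slash:comments>42</slash:comments>
    </item>
    <item>
      <title>Second post</title>
    </item>
  </channel>
</rss>"""


def comment_counts(feed_xml):
    """Map each item's title to its comment count, or None if the tag is absent."""
    root = ET.fromstring(feed_xml)
    counts = {}
    for item in root.iter("item"):
        title = item.findtext("title", default="")
        raw = item.findtext("slash:comments", namespaces=SLASH_NS)
        counts[title] = int(raw) if raw is not None else None
    return counts


print(comment_counts(SAMPLE_FEED))
```

The None case is the one that bit me: once a site swaps commenting systems, the tag can simply vanish from the feed.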

Digg, whose Diggs were once a great metric of the worthiness of a TechCrunch blog post, faded away. So I had to stop using Diggs.

So that left Facebook's metrics. They weren't ideal for assessing TechCrunch articles, but they were all that was left. Using Facebook likes and shares worked for a while. And then Facebook changed their APIs! They once had an API, FQL, that let you easily determine how many likes and shares an article had. They killed that API, leaving me with a slightly more complicated way to query the metrics I need for the service to do its work.

Not The End

I've had to continuously groom and maintain the feed filter over these past six years as websites rise, fade, and change their engines. And I'll have to keep doing so, for as long as I want my Feed Filter to work. But I don't mind. It's a labor of love, and it saves me time in the long run.

1 One of the original announcement posts.

2 Remember Digg? No? Young'un. I still use their Digg Reader.

Backup Solutions: Choosing rsync.net

In June 2013, I investigated various online backup solutions, and decided on a hybrid solution: Use the free 5GB of iCloud and then back up the rest to DreamObjects.

That didn't work out.

- boto-rsync only uses file-size to decide to update a file. There'd be too many false negatives for my comfort level.

- I was still using DreamHost Backup which uses rsync proper, and the first 50GB were free. At that price, it was irresistible.

- If I used duplicity, I wasn't comfortable (yet) with the cost associated with the incremental backups to DreamObjects.
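To show why the size-only check in the first point made me nervous, here's a small Python sketch. It isn't boto-rsync's code, and the file names and contents are made up. It just compares two files that differ in content but not in length.

```python
import hashlib
import os
import tempfile


def same_by_size(a, b):
    """Size-only comparison: cheap, but blind to same-length edits."""
    return os.path.getsize(a) == os.path.getsize(b)


def same_by_hash(a, b):
    """Content comparison via SHA-256 digests, read in chunks."""
    def digest(path):
        h = hashlib.sha256()
        with open(path, "rb") as f:
            for chunk in iter(lambda: f.read(65536), b""):
                h.update(chunk)
        return h.hexdigest()
    return digest(a) == digest(b)


with tempfile.TemporaryDirectory() as tmp:
    local = os.path.join(tmp, "local.txt")
    remote = os.path.join(tmp, "remote.txt")
    # Hypothetical contents: one character changed, length unchanged.
    with open(remote, "w") as f:
        f.write("balance: 1000\n")
    with open(local, "w") as f:
        f.write("balance: 9000\n")
    size_says_same = same_by_size(local, remote)
    hash_says_same = same_by_hash(local, remote)

print(size_says_same, hash_says_same)
```

A tool that trusts the size check would skip the changed file. By default, rsync proper also compares modification times, and it can compare checksums when asked.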

It's a new world. And it became time to revisit my remote backup strategy.

- Windows 10 will support Bash soon! This means I could use the same rsync scripts from each of my devices whether Raspbian, other Linux, Mac OS, or Windows. (And without having to use cygwin!)

- "If you're not the customer, you're the product." I observed this fact when I used OpenPaths, and its service became unavailable. And again while I was backing up to DreamHost Backup, and it was discontinued. No more free remote rsync for me!

And then I discovered rsync.net. I don't know why I hadn't noticed them before; they've been around for years. It turns out that theirs is the service that I've been waiting for.

- I'm the customer. I pay for the storage, so they're accountable to me. I've only heard good things about their customer support.

- And just as rsync itself is platform agnostic, so is their service. And I'm already using rsync for local backup on all my computers. Setting rsync.net as my remote backup was very easy.

- I already treat confidential data specially, so even on local disk it's encrypted.

I'm really happy with rsync.net, and my Windows environment is going to be even more straightforward when I can use Windows Subsystem for Linux (WSL). The devices I'm still not certain about are the family's phones. Here's what we're doing so far.

- We're doing rare full phone backups to local computers.

- We're using Google Photos apps to sync photos more frequently from the phone to the cloud. (We're Google's product. It's a deal with the devil, I know.)

- I use a different source for Contacts and Calendar data, and the phone is merely a sync target, never a source.

Two oddities that WSL might make better

I have to have two strange lines of code because I use Cygwin and rsync to back things up. Applications like PuTTY, which stores preferences in the Windows Registry, and FileZilla, which stores preferences in %APPDATA%, need special treatment.

I copy PuTTY's settings to my Documents folder.

regedit /e "%USERPROFILE%\Docs\PuTTY.reg" HKCU\Software\SimonTatham

I back up FileZilla's settings explicitly.

rsync -a "$(cygpath "$APPDATA")/FileZilla" me@rsync.net:APPDATA/

Who knows? Maybe there'll be another update about my backup strategy in the future.

Photo by Jill Laurie Goodman / CC BY-NC 2.0

Choosing a Cloud-based Backup Solution

Here's a comparison of some potential cloud backup solutions. I'd like to back up some desktop application settings to the cloud, user content from all the members of my family, and content from our mobile devices. It seems like every member of my family has different tastes in music, and we can't stop taking videos and photos.

Dropbox

Dropbox is a great tool, and it solves the problem of storing user content in the cloud. And it's free for the first 2 to 18 GB. (That's why the Dropbox line is blurry. The amount you get for free depends on what you do for them.) But it becomes $10.00 a month after that, up to 100GB, and then more after that. And it doesn't back up certain non-Dropbox directories.

Microsoft SkyDrive offers a handy comparison of similar services, and it compares favorably in many cases. But all the services have similar drawbacks with regard to which media get backed up, and how media is shared or not shared across different accounts, each of which has to be paid for individually. By the way, you can check your current Google Drive storage here.

iCloud

For the members of the family that have iOS devices, we could back up to iCloud for free, up to 5GB. I really like that the backups would be effortless. But 5GB isn't very much for our photos, videos, and music nowadays. If we need more space, we could upgrade an iCloud or more, and our devices could share iClouds, but each cloud caps out at 55GB, and who would share which clouds? If our devices share clouds, would they have to sync the same media? That's not really what we want, and it doesn't help me out with my PC backup.

Dreamhost Backup

As a customer of Dreamhost, I get a backup plan that's free for the first 50GB. That's quite decent. I'm using it already to back up my desktop. I love that the backup is done via rsync over ssh. It's flexible, smart, and encrypts my data on its way to the server in the cloud. But it's a single server in the cloud, and as such, it's a single point of failure. After the first 50GB, it's $0.10 per GB per month.

That's great for the desktop so far. But it doesn't help with the handheld devices unless I have them sync to the desktop, and then have the desktop sync to the cloud. That'd require user action, and that's a point of failure.

DreamObjects

Dreamhost offers high availability space (data is replicated three times, with immediate consistency) in the cloud for effective prices of under $0.07 per GB for developers. As an early adopter, I got in at a promotional rate. For the first 10 GB, DreamObjects isn't the cheapest solution, but after around 60 GB, DreamObjects becomes a great solution based on price.

DreamObjects doesn't transfer via ssh, so if I want to encrypt my data, I have to do it myself. For data that doesn't need encryption, I can use boto-rsync, which is like rsync. (Note that I linked to a fork that includes the "--exclude" argument.) For data that needs encryption, I'd do it with duplicity.

Of course, it's got the same problem as Dreamhost Backup. It doesn't help with the handheld devices unless I have them sync to the desktop, and then have the desktop sync to the cloud.

The Final Solution

You can't beat free. And you can't beat automatic. While simpler is better, and just choosing one solution would be the simplest, for a cheap developer like me, a hybrid solution looks the most attractive.

Everybody who's got iOS devices will back up the most important type of media that fits into 5 GB per iCloud. After that, we'll have to manually sync our handheld devices to a desktop, and that'll sync with DreamObjects. While I dislike that there'll be a manual step in getting some data into the cloud, I do like that this backup is device independent, and completely within my control.

Implementation Details

From a Linux box, or from an OS X command line, it's even easier than this. But if you're installing into Cygwin, assuming you have easy_install installed, here are some installation notes for boto-rsync:

$ easy_install pip
$ pip install boto_rsync

A boto-rsync command to DreamObjects looks like this:

$ boto-rsync -a "public_key" -s "secret_key" \
    --endpoint objects.dreamhost.com \
    --delete ~/dir-to-backup/ s3://bucket/dest-of-backup/

And for duplicity, you'd need to have installed both librsync1 and librsync-devel from Cygwin first. Then:

$ pip install httplib2 oauth
$ curl -L http://goo.gl/VBVmB \
    > duplicity-0.6.21.tar.gz
$ tar xvzf duplicity-0.6.21.tar.gz
$ cd duplicity-0.6.21/
$ python setup.py install

A duplicity command to DreamObjects looks like this, after you've configured a .boto file with your credentials:

$ env PASSPHRASE=yourpassphrase \
    duplicity ~/dir-to-backup/ \
    s3://objects.dreamhost.com/bucket/dest-of-backup

Edit: Here's a follow-up to this post written in 2016.

Finding an RSS Feed Reader to Replace Google Reader

As soon as the shuttering of Google Reader was announced, I went on the hunt for alternatives. I've researched various options, both self-hosted and cloud-based. I've tested them all in parallel for over a week, and have come to a tentative conclusion.

Your time is precious, so here's my decision so far: My absolute favorite is selfoss. It's fast, minimal, and looks beautiful in both desktop and mobile formats. It happened to be very easy to install, had no trouble taking in my OPML file, and already had the right keyboard navigation keys configured. It mostly worked correctly right out of the box.

There were two settings I changed in the config.ini file:

homepage=unread
auto_mark_as_read=1

That sets up the behavior I prefer. I want the site to always start with a list of unread content, and as I navigate around, I like the articles to be automatically marked as read.

Did I say it was minimal? Oh, it is. Gloriously so. And a bit too much. It makes for a very consistent reading experience because it strips away the effect of almost all HTML elements. No embedded videos, many pictures are not displayed, and all text is displayed at one size and one weight.

That won't do for me. I want to see a little CSS beautification in my reader. Whitespace between paragraphs and seeing all the video and images is important to me. I need to know when the images are there. So I made some minor changes to the codebase.

- I removed elements like strong, b, em, i and p from the strip-all-style part of public/all.css.

- I used this technique to remove elements from simplepie's strip_html list. I allowed iframe, object, param and embed in spouts/rss/feed.php for embedded videos.

- I turned off safe and whitelisted the embedded video tags in the htmLawed object in helpers/ContentLoader.php.

- Finally, I made this change from ref= to src= in helpers/ViewHelper.php.

Having made the changes above, now the feeds in my selfoss reader retain some rudimentary style and properly display video and images.

Now that's much better. This is a selfoss installation that I can live with.

As for the runners-up? I liked Tiny Tiny RSS a lot. But it was slower to load and respond. And the visual presentation for desktop mode wasn't as nice. There's too much clutter. Its mobile version is not supported, but ttrss-mobile is awesome. Install it into /mobile for the easiest experience. Finally, remap the keys j and k to next_article_noscroll and prev_article_noscroll with a plugin.

If I were forced to go with a 3rd party cloud-based product, I'd probably choose Feedly. It's relatively fast and minimal. After that, it's a toss-up between NetVibes and The Old Reader. I didn't pay NewsBlur to see how they'd perform with a moderately large OPML. I'm looking forward to seeing what Digg comes up with.

And as for local desktop clients? They're not in the running. I need my feed reader to be current on any screen I happen to log in to.

Finally, some suggest using Twitter as Google Reader's replacement. I enjoy "dipping into the stream" as it were in Twitter, Facebook and Reddit. But I need a tool that'll save articles from my favorite friends and content creators too.

I hope you may find this helpful. If nothing else, it'll serve to pinpoint the state of the art in early 2013 for keeping track of content online.

Happy Birthday, Me. I got you Vim!

I used to play NetHack. The keyboard keys it uses to navigate your player are the same screen navigation keys for the editor vi. So I've always been able to make do with vi and its successor, Vim.

I've recently decided to give Vim a serious look, since it's still ubiquitous after more than 20 years (I find myself having to ssh to remote computers more often than I expected), and I have really smart, productive colleagues who use it every day. Somehow, it has stood the test of time. Vim is one of those tools that increases in value exponentially if you invest the time to become proficient in it.

So, in the grand tradition of giving myself strange intangible gifts like giving myself data portability a couple of years ago, this year, I decided to invest some time in Vim.

I'm still new to it, but I've tasted the change in mindset that happens as you get better and better at Vim. It feels like you're programming the act of text editing, and I mean that in the best way possible. (This is coming from a guy who spends his free time programming cron jobs.)

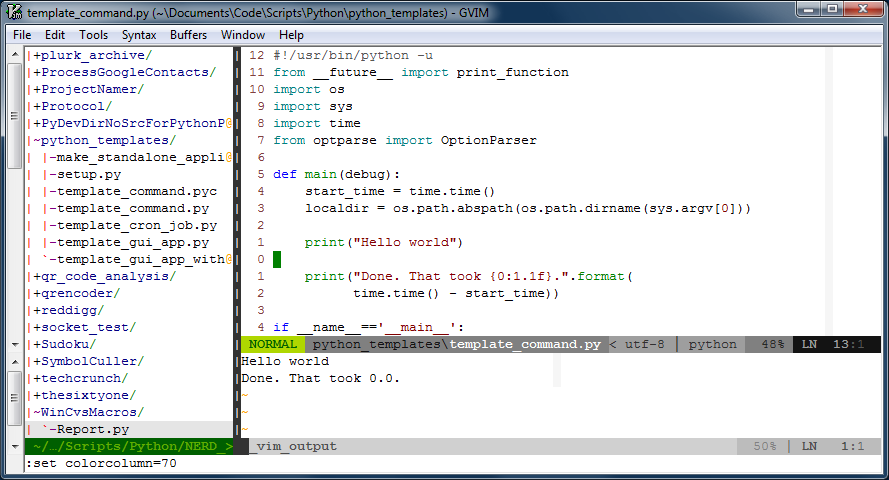

Time for a screenshot of what Vim looks like for me when I'm working on a shell command. Click on it to see it at full size.

There are three "windows" in the image above. The window on the left is a tree-view of the files on disk. That window is managed by a script called NERDTree, and it's usually closed. I only open that when I need to look at another file. On the right are two windows, one above the other. The larger one is on top, and is the "main" window that hosts the main buffer that I'm actively editing. The smaller window on the bottom is an "output" window that prints out the results of the script I'm editing whenever I run it.

That image represents an iterative workflow where I edit (possibly multiple buffers) in the biggest window, hit <F5> to run the script, and see the output displayed in the window below. It's a workflow that works well for small scripts.

There are a few things about that window that I'd like to point out:

One: The line numbers are relative to the current position of the cursor. This is really handy because Vim's normal mode commands work nicely with line number counts. The line numbers change automatically to absolute line numbers when the window loses focus. That's accomplished by this bit in my .vimrc file:

autocmd FocusLost * if &relativenumber | set number | endif
autocmd FocusGained * if &number | set relativenumber | endif

Here's a great explanation for why to switch between relative and absolute line numbers in vim.

Two: There's a very subtle light gray column at column 70 in the image. Usually it's at column 80, but I bumped it in to take the screenshot. That column helps remind me to keep my code lines short.

Three: There are a few plugins doing work behind the scenes. You can see one called powerline just under the main window. Powerline is a very handy status line. What you can't see in the image are other plugins like taglist that help when one is developing code. Vim also supports syntax completion.

Four: Vim is very customizable, and it sometimes needs it. For example, I like the behavior from setting smartindent, except when it un-indents the # character to the first column of the line. In Python, the # character starts a comment, and I want it to remain in the column in which I put it. Here's what I've added to my .vimrc file to fix that issue:

set smartindent
autocmd FileType python inoremap # X<c-h>#

Boo that Vim doesn't always do what you want out of the box. Yay that it always can be fixed to work the way you'd prefer.

Five: Vim lets you continue to undo actions from your previous sessions with the file, if you like. Isn't that an awesome option to have? If you want it, the option to set is:

set undofile

Far better than what I've written here, I strongly recommend Steve Losh's article, Coming Home to Vim. He's got a lot more experience with Vim, and he makes a compelling case for it.

It's a work in progress, but I'm willing to share what I've got so far in my .vimrc file. You'll see that I customize far more than I mention here in this article. Once it's stable, I may maintain it in a repository on GitHub.
